AI pricing studies: Cohere LLM

Steven Forth is CEO at Ibbaka. See his Skill Profile on Ibbaka Talio.

Edward Wong is a Business Development Representative at Ibbaka. See his LinkedIn profile.

OpenAI has taken the world by storm and commands the spotlight when it comes to large language models (LLMs). Its advances have captured the attention of enthusiasts and experts alike. It is not alone in this domain, however. Google (PaLM 2), Nvidia (NeMo), and Meta (LLaMA) have also made significant contributions to shaping the landscape. What truly excites us, though, is the emergence of startups as serious contenders in this field.

Among these rising stars, Cohere stands out. Founded in Toronto, Cohere counts Aidan Gomez among its co-founders. Gomez co-authored the influential research paper "Attention Is All You Need," which revolutionized artificial intelligence by introducing the Transformer architecture, the backbone of popular LLMs such as OpenAI's GPT-4. Cohere aims to redefine the landscape of enterprise AI.

Cohere offers an important alternative to the LLMs from the big players. In this post, we look at the use cases Cohere prices and compare its pricing and positioning to those of market leader OpenAI.

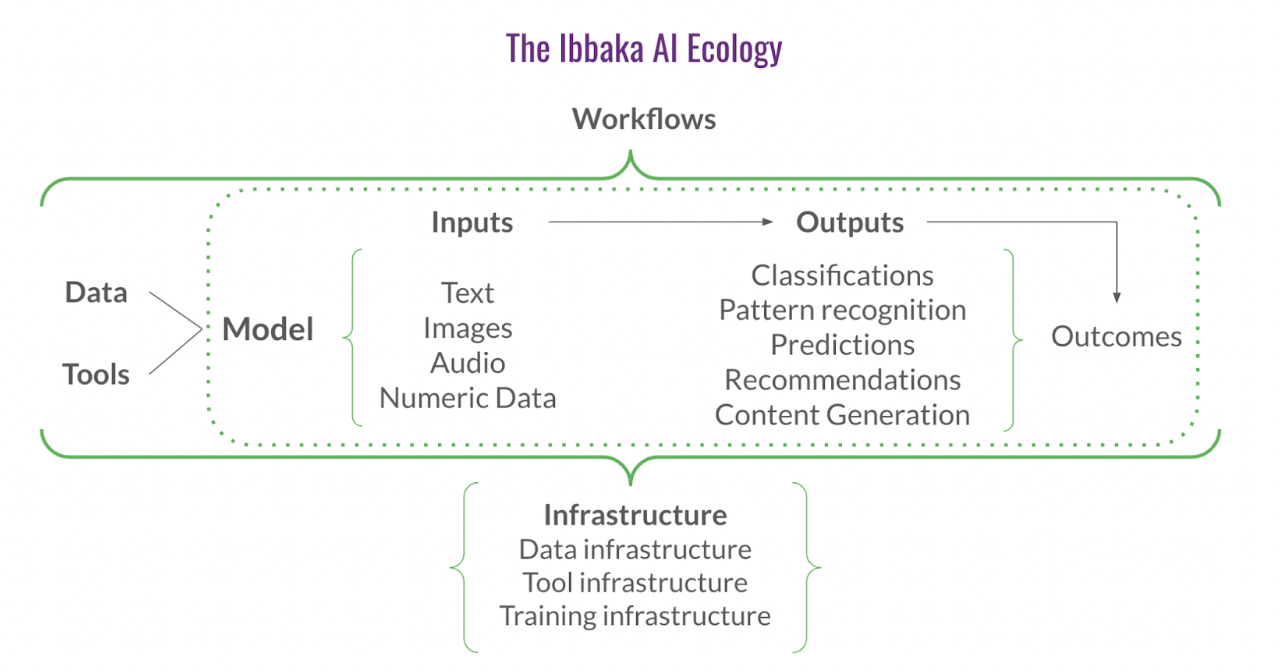

Where does Cohere AI play in the Ibbaka AI Ecology? The key niches in this framework are Data, Tools, Workflow Management, Infrastructure and Models. Cohere provides models.

There are many ways one could price models. Historically, they tended to be priced on either the amount of data used to train the model or the size of the model (which incented companies to build bloated models, though large language models are not exactly svelte anyway). This has changed with the emergence of hosted, prebuilt models that many people access for an even wider range of purposes.

The emerging pattern for large language models seems to be pricing based on the number of tokens in the input (the prompt) and the number of tokens in the output. The PeakSpan-Ibbaka survey on Net Revenue Retention (still open for responses) has received 15 responses from AI companies so far, out of about 250 responses in total. These companies use a variety of pricing metrics, with input and output tokens being the most common. (We will go into much more detail when we analyze this data for the final report, which will be released at SaaStr in September in San Francisco.)

The Five Cohere AI offers and how they are priced

Cohere formally supports five offers, or use cases.

Embed: For ML teams looking to build their own text analysis applications, Embed offers high-performance, accurate embeddings in English and 100+ languages.

Generate: Generate produces unique content for emails, landing pages, product descriptions, and more.

Classify: Classify organizes information for more effective content moderation, analysis, and chatbot experiences.

Summarize: Summarize provides text summarization capabilities at scale.

Rerank (Search Enhancement): Rerank provides a powerful semantic boost to the search quality of any keyword or vector search system without requiring any overhaul or replacement.

There is also a free plan that lets users test the platform and get hands-on experience before integrating it into their products. The free plan provides a Trial API key with rate limits that vary by endpoint. For example, the Generate and Summarize endpoints are limited to 5,000 generation units per month, while the Embed and Classify endpoints are limited to 100 calls per minute.

A Production API key offers a higher rate limit of 10,000 calls per minute.
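The rate limits above put a floor on how long a bulk job takes. The sketch below is a back-of-the-envelope calculation, assuming calls are spread perfectly evenly and ignoring latency and retries. The constant and function names are hypothetical and not part of any Cohere SDK, and "generation units" are treated as an opaque count because this post does not define them.

```python
import math

# Limits as quoted in this post; check Cohere's current documentation before relying on them.
TRIAL_CALLS_PER_MINUTE = 100               # Embed and Classify, Trial API key
PRODUCTION_CALLS_PER_MINUTE = 10_000       # Production API key
TRIAL_GENERATION_UNITS_PER_MONTH = 5_000   # Generate and Summarize, Trial API key


def min_minutes(calls: int, calls_per_minute: int) -> int:
    """Smallest number of whole minutes needed to send `calls` requests under a per-minute cap."""
    return math.ceil(calls / calls_per_minute)


def within_trial_generation_quota(units_used_this_month: int) -> bool:
    """True if monthly Generate/Summarize usage stays inside the Trial allowance."""
    return units_used_this_month <= TRIAL_GENERATION_UNITS_PER_MONTH


if __name__ == "__main__":
    calls = 25_000
    print(min_minutes(calls, TRIAL_CALLS_PER_MINUTE))
    print(min_minutes(calls, PRODUCTION_CALLS_PER_MINUTE))
    print(within_trial_generation_quota(6_000))
```

Under those assumptions, 25,000 Embed calls need at least 250 minutes on a Trial key and 3 minutes on a Production key.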

Embed API Pricing

Pricing Metric: Per Token

The default pricing is $0.40 per 1 million tokens.

A second option, for higher token quantities, costs $0.80 per 1 million tokens. Both options perform best on sequences under 512 tokens, and each API call can return up to 96 embeddings. Note that the price per token goes up, not down, for increased capacity. At this point in time, though, few companies will need the higher-capacity offer.
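To make the Embed prices concrete, here is a minimal cost estimate for embedding a corpus, assuming you already know (or can guess) the average number of tokens per text. The function and constant names are hypothetical; the sketch does no tokenization and simply multiplies token counts by the per-million rates quoted above, then counts the minimum number of API calls given the 96-embeddings-per-call batch size.

```python
import math

EMBED_DEFAULT_PER_MILLION = 0.40        # USD per 1 million tokens (default)
EMBED_HIGH_CAPACITY_PER_MILLION = 0.80  # USD per 1 million tokens (higher capacity)
MAX_TEXTS_PER_CALL = 96                 # embeddings returned per API call


def embed_estimate(num_texts: int, avg_tokens: int,
                   price_per_million: float = EMBED_DEFAULT_PER_MILLION) -> tuple[float, int]:
    """Return (estimated cost in USD, minimum number of API calls) for embedding a corpus."""
    total_tokens = num_texts * avg_tokens
    cost = total_tokens * price_per_million / 1_000_000
    calls = math.ceil(num_texts / MAX_TEXTS_PER_CALL)
    return cost, calls


if __name__ == "__main__":
    # Hypothetical corpus: one million texts averaging 200 tokens each (200 million tokens).
    print(embed_estimate(1_000_000, 200))
    print(embed_estimate(1_000_000, 200, EMBED_HIGH_CAPACITY_PER_MILLION))
```

For that hypothetical corpus, the estimate comes to about $80 at the default rate and $160 at the higher-capacity rate, spread over at least 10,417 API calls.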

Generate API Pricing

Pricing Metric: Per Token

The default pricing is set at $15.0 per 1 million tokens.

The custom pricing option is available at $30.0 per 1 million tokens, providing an alternative for users with larger token quantities or specialized requirements. With both options, input and output tokens are charged equally. Again, one pays more for high volume.
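Because Generate charges input and output tokens at the same rate, only the total token count matters. A minimal sketch, assuming the per-million rates quoted above; the names and the example workload are hypothetical.

```python
GENERATE_DEFAULT_PER_MILLION = 15.0  # USD per 1 million tokens
GENERATE_CUSTOM_PER_MILLION = 30.0   # USD per 1 million tokens


def generate_cost(input_tokens: int, output_tokens: int,
                  price_per_million: float = GENERATE_DEFAULT_PER_MILLION) -> float:
    """Input and output tokens are billed at the same rate, so only their sum matters."""
    return (input_tokens + output_tokens) * price_per_million / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 product descriptions,
    # each with a 300-token prompt and a 200-token output.
    n = 10_000
    print(generate_cost(300 * n, 200 * n))
    print(generate_cost(300 * n, 200 * n, GENERATE_CUSTOM_PER_MILLION))
```

That workload is 5 million tokens, or $75 at the default rate and $150 at the custom rate. The same arithmetic applies to Summarize, which is also quoted at $15.0 per 1 million input and output tokens.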

Classify API Pricing

Pricing Metric: Per Classification

The default pricing is set at $0.2 per 1,000 classifications.

Each text input to be classified counts as one classification, and there is no charge for providing examples.
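Classify moves away from tokens entirely: the bill depends only on how many texts are classified. A minimal sketch, assuming the default price above; the function name and the moderation-queue example are hypothetical.

```python
CLASSIFY_PRICE_PER_THOUSAND = 0.2  # USD per 1,000 classifications


def classify_cost(inputs: list[str]) -> float:
    """Each input text is one billable classification.

    Labelled examples supplied to guide the classifier are not billed,
    so they are not passed in here at all.
    """
    return len(inputs) * CLASSIFY_PRICE_PER_THOUSAND / 1000


if __name__ == "__main__":
    comments = ["user comment"] * 50_000  # hypothetical moderation queue
    print(classify_cost(comments))
```

Classifying 50,000 comments would cost about $10, regardless of how long each comment is.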

Summarize API Pricing

Pricing Metric: Per Token

The default pricing is set at $15.0 per 1 million tokens.

Charges are based on the overall tokens processed, which includes both input and output tokens.

Rerank Pricing

Pricing Metric: Per Search Unit

The default pricing is set at $1.0 per 1,000 search units.

A single search unit is one query with up to 100 documents to be ranked. Documents exceeding 510 tokens (including the length of the search query) are split into multiple chunks, with each chunk counted as a search unit for pricing purposes.
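The Rerank rule above can be read more than one way. This sketch assumes that each query is billed in search units of up to 100 documents, that every chunk of an over-long document counts as one document toward that limit, and that each chunk holds 510 tokens minus the query's length. Those are interpretations, not confirmed details of Cohere's billing, and all names are hypothetical.

```python
import math

RERANK_PRICE_PER_THOUSAND_UNITS = 1.0  # USD per 1,000 search units (default)
MAX_DOCS_PER_UNIT = 100                # documents ranked per search unit
MAX_CHUNK_TOKENS = 510                 # limit includes the query's tokens


def chunks_for_document(doc_tokens: int, query_tokens: int) -> int:
    """Assumption: each chunk holds (510 - query_tokens) document tokens."""
    room = MAX_CHUNK_TOKENS - query_tokens
    if room <= 0:
        raise ValueError("query alone exceeds the 510-token limit")
    return max(1, math.ceil(doc_tokens / room))


def search_units(query_tokens: int, doc_token_counts: list[int]) -> int:
    """Search units for one query; assumes a query is always at least one unit."""
    docs = sum(chunks_for_document(d, query_tokens) for d in doc_token_counts)
    return max(1, math.ceil(docs / MAX_DOCS_PER_UNIT))


def rerank_cost(queries: list[tuple[int, list[int]]]) -> float:
    """queries: (query_tokens, [tokens per document]) for each search."""
    units = sum(search_units(q, docs) for q, docs in queries)
    return units * RERANK_PRICE_PER_THOUSAND_UNITS / 1000


if __name__ == "__main__":
    # A 10-token query over 100 short documents vs. 100 long ones.
    print(search_units(10, [300] * 100))
    print(search_units(10, [1200] * 100))
```

Under these assumptions, a 10-token query over 100 documents of 300 tokens is one search unit, while the same query over 100 documents of 1,200 tokens becomes three, because each long document splits into three chunks.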

Comparing Cohere AI and OpenAI GPT-4

Are Cohere’s offer and OpenAI’s offer comparable? That is a complex question, as the answer depends on the specifics of the use case. Measuring models by the number of parameters they contain may not give much insight into how useful they are. That said, GPT-4 is reportedly much bigger, with about one trillion parameters compared to roughly 52 billion for Cohere.

For those who want to dive into comparing LLMs, the Center for Research on Foundation Models at Stanford University has a project on this. The project is known as HELM (Holistic Evaluation of Language Models) and currently compares 36 models across 42 scenarios using 57 metrics. Ibbaka will analyse the pricing of each of these models in a future post.

There are some basic differences between OpenAI and Cohere. OpenAI’s GPT-4 is meant to be a general model that is used as is (OpenAI does have other models that can be trained). Cohere’s approach is quite different: in many cases, the Cohere model will be augmented with additional training data from the user.

Ibbaka, for example, might train Cohere (or any LLM) on things like:

Value models

Value stories

Pricing models

Pricing web pages

Discounting policies

Proposals

Contracts

General content on pricing strategy, tactics, negotiations

Design thinking (we see pricing as part of the designed world)

Customer journey maps (especially those containing swimlanes for value and price)

Category data (who participates in a category, common functionality, value propositions, etc.)

And many other things that shape how one thinks about packaging and pricing

OpenAI does let you train some of its earlier models. These models go by the names Ada, Babbage, Curie and Davinci (evocative names for people in the computer science or innovation fields). The models are differentiated (fenced) by how fast they operate (with Ada being the fastest) and how powerful they are (with Davinci being the most powerful). There seems to be a tradeoff between speed and power for these trainable OpenAI models.

The ability to easily augment and tune models for specific use cases and contexts will be key to success for many practical business questions. Do not assume that an LLM will give you differentiated functionality out of the box.

There are important differences in how OpenAI and Cohere are priced as well. These are summarized in the following table.

This comparison is drawn from the OpenAI pricing page and the Cohere pricing page.

How will pricing of LLMs evolve?

Pricing of large language models is at the beginning of its evolution. Cohere is already differentiating its pricing by using different pricing metrics for different use cases.

Cohere prices input and output tokens at the same rate (OpenAI prices output tokens at twice the rate of input tokens)

Cohere is introducing pricing metrics other than tokens that are better adapted to specific use cases

Per classification for the Classify functionality

Per search unit for Rerank (search ranking)
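The difference between equal input/output rates and output tokens at twice the input rate matters most for output-heavy work. The sketch below isolates that structural effect only: it uses one hypothetical base rate for both schemes, so it says nothing about actual Cohere or OpenAI price levels. The function names and the two example workloads are hypothetical.

```python
def token_cost(input_tokens: int, output_tokens: int,
               input_rate: float, output_rate: float) -> float:
    """Generic two-part token bill; rates are in USD per token."""
    return input_tokens * input_rate + output_tokens * output_rate


def output_premium_ratio(input_tokens: int, output_tokens: int) -> float:
    """Cost when output costs 2x input, divided by cost when both rates are equal.

    Both schemes share the same hypothetical input rate, which cancels out.
    """
    r = 1.0  # hypothetical base rate
    equal = token_cost(input_tokens, output_tokens, r, r)
    doubled = token_cost(input_tokens, output_tokens, r, 2 * r)
    return doubled / equal


if __name__ == "__main__":
    print(output_premium_ratio(900, 100))  # input-heavy, e.g. summarizing long documents
    print(output_premium_ratio(100, 900))  # output-heavy, e.g. drafting long content
```

With the same base rate, an input-heavy workload costs about 1.1 times as much under output-premium pricing, while an output-heavy one costs about 1.9 times as much.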

Over time, as use cases emerge, one can expect to see more and more of these function-based pricing metrics. Rather than input and output tokens, which are primarily of interest to engineers and are basically cost metrics, pricing metrics will be based on the use case.

A good generative AI pricing metric will have the following properties.

Make it easy to predict revenue for the vendor and cost for the user

Connect usage to value

Be easy to scale across the full range of possible uses

There are many different use cases for content generation AIs, and we are just beginning to explore the possibilities. The current dominance of token-based and site-based pricing will likely give way quickly to use case pricing metrics. This is something to look forward to, as it will let people adopting this new technology align price with their own business model.